Meta Split Test Setup with a Sandbox

Step-by-step guide to setting up a Meta split test (A/B or multi-cell) with a sandbox

Sandbox Setup

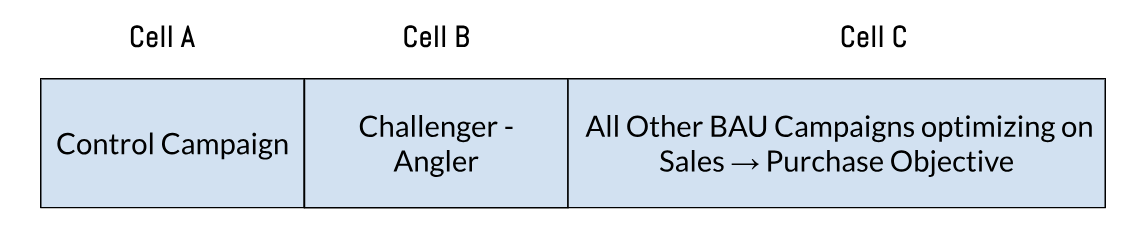

When you run a split test (A/B or multi-cell) in Meta, the platform automatically ensures that the audiences of the test cells (A, B, C, etc.) do not overlap with one another. However, those same test cell audiences may still overlap with business-as-usual (BAU) campaigns running outside the test.

If your goal is to keep the split test audiences completely separate from BAU campaigns, we recommend a sandbox design. In this setup, you create an additional “sandbox” cell (a third cell, if you are running an A/B test) and place in it all BAU campaigns with a Sales → Purchase objective.

This structure keeps both the control and challenger (Angler) test cells fully isolated from BAU campaigns, which eliminates audience overlap and enables a clean, reliable in-platform readout of test results.

Set Up a Split Test with a Sandbox

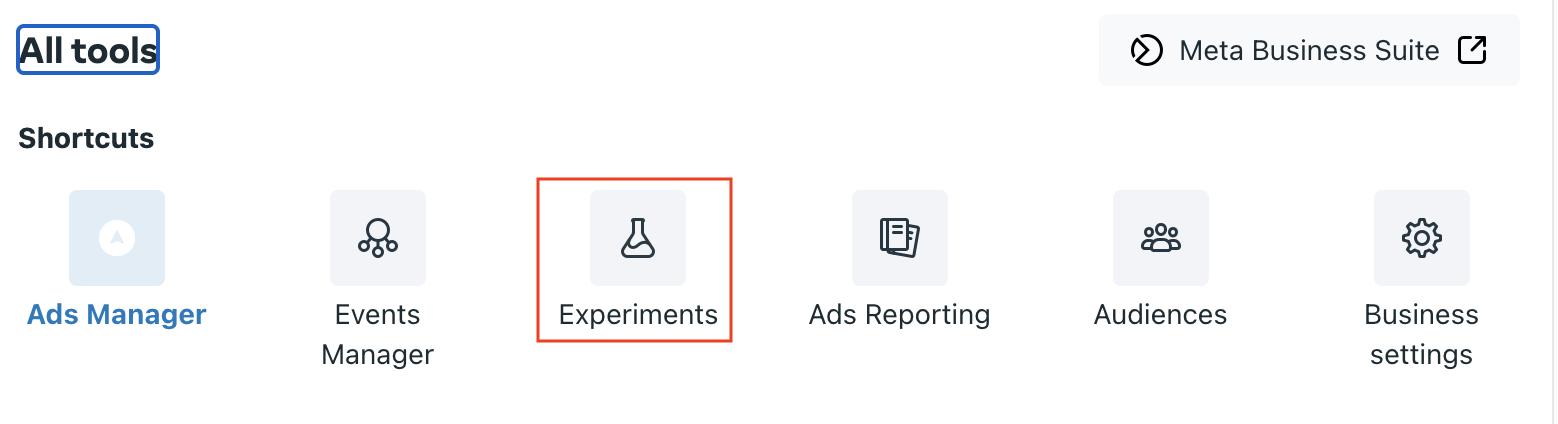

In Ads Manager, go to All tools > Experiments.

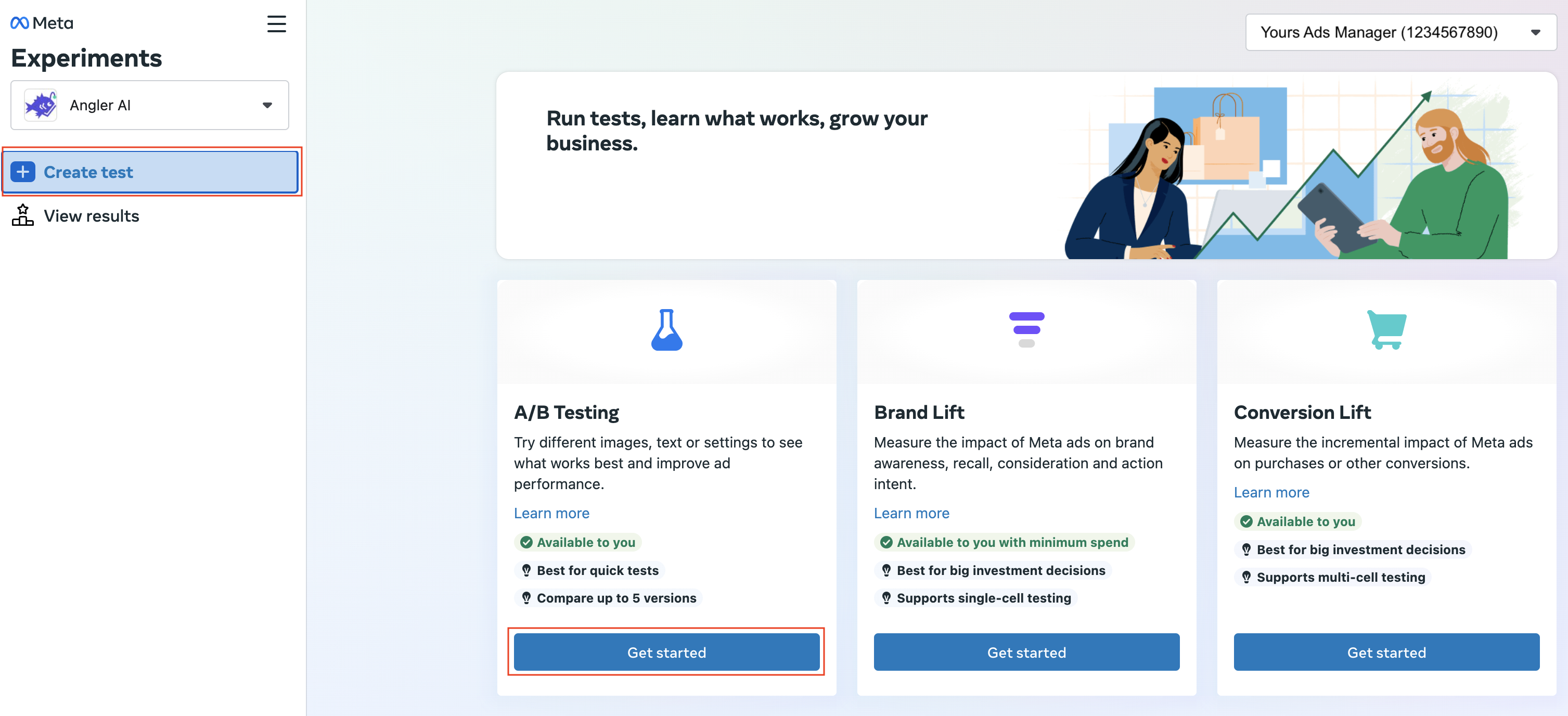

From there, start a new A/B test by selecting Create test > A/B Testing > Get Started.

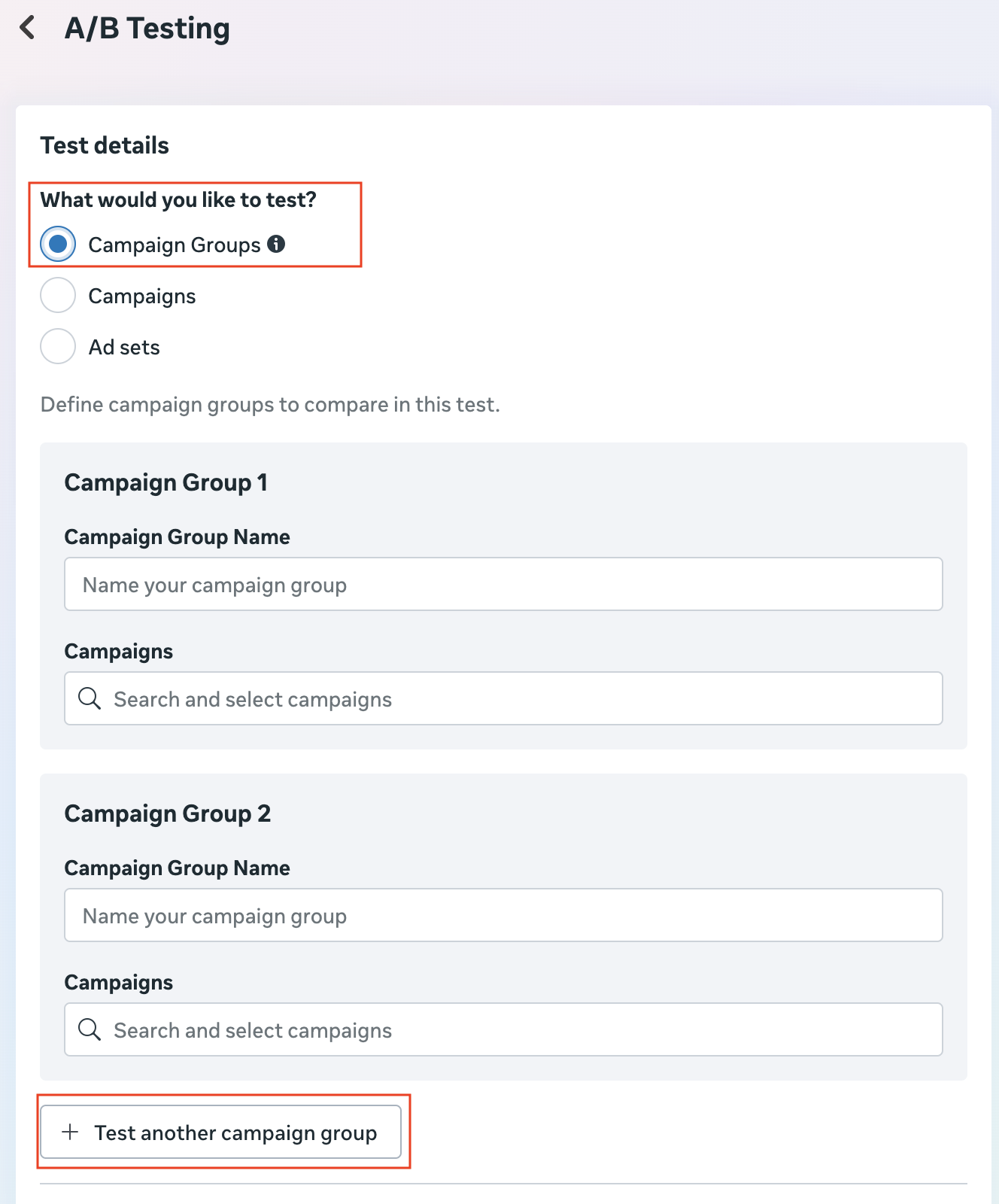

Because your sandbox will include multiple active campaigns, select Campaign Groups when asked, “What would you like to test?”

Because the sandbox needs its own cell in addition to your test cells (for example, a third cell for an A/B test, or a fourth cell for a three-cell test), choose Test another campaign group to create this extra campaign group.
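To make the cell count concrete, here is a minimal planning sketch: the number of campaign groups is always the number of test cells plus one sandbox. `CampaignGroup` and `plan_cells` are hypothetical helpers for illustration only, not part of Ads Manager or the Meta Marketing API, and the campaign names are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class CampaignGroup:
    # Hypothetical planning record for one cell; not a Meta API object.
    name: str
    campaigns: list[str] = field(default_factory=list)
    is_sandbox: bool = False


def plan_cells(test_groups: dict[str, list[str]], bau_campaigns: list[str]) -> list[CampaignGroup]:
    """Return one campaign group per test cell plus one extra sandbox group."""
    cells = [CampaignGroup(name, list(campaigns)) for name, campaigns in test_groups.items()]
    # The sandbox is always one cell on top of the real test cells and holds every BAU campaign.
    cells.append(CampaignGroup("sandbox", list(bau_campaigns), is_sandbox=True))

    # A campaign should belong to exactly one cell; this catches planning mistakes.
    seen: set[str] = set()
    for cell in cells:
        for campaign in cell.campaigns:
            if campaign in seen:
                raise ValueError(f"campaign {campaign!r} is assigned to more than one cell")
            seen.add(campaign)
    return cells


cells = plan_cells(
    {"control": ["Control - Purchase"], "challenger (Angler)": ["Angler - Purchase"]},
    ["BAU - Prospecting", "BAU - Retargeting"],
)
print([cell.name for cell in cells])  # ['control', 'challenger (Angler)', 'sandbox']
```

For a three-cell test, pass three entries in `test_groups` and the sketch returns four campaign groups.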

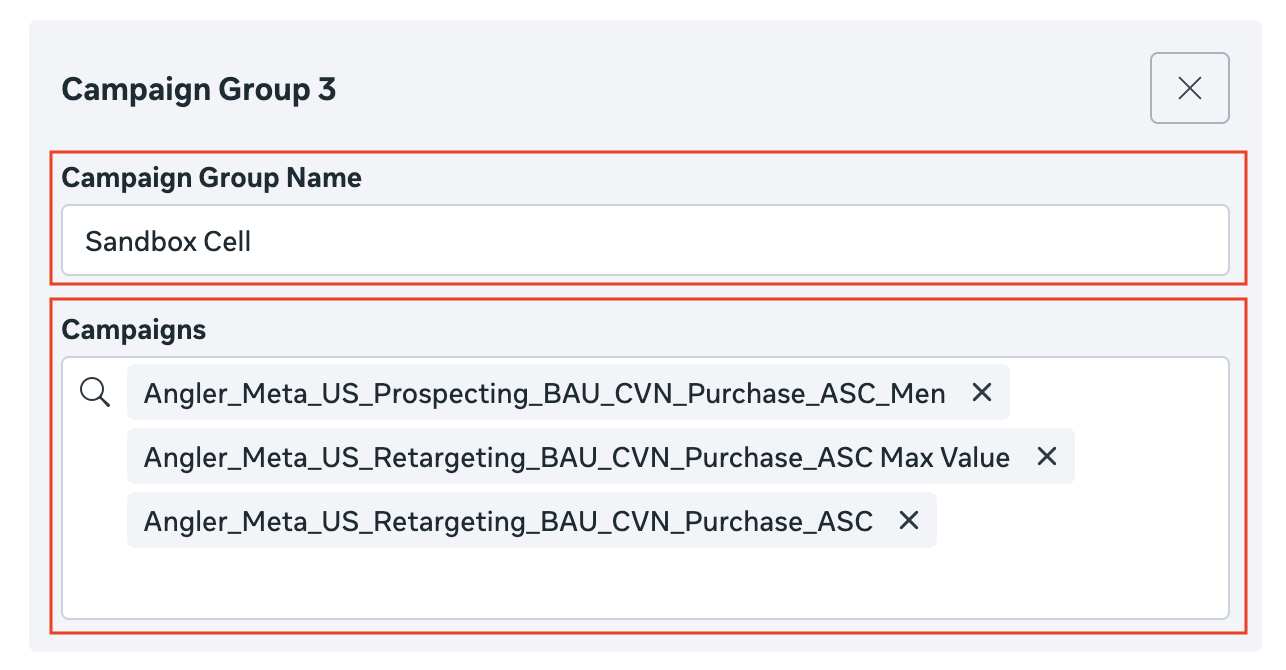

Name the sandbox campaign group “sandbox” so you can easily identify it in the test results, and exclude all of its metrics from your decision-making.

We recommend the following settings:

- Test name: give it a descriptive name that helps you and your team identify the objective of the A/B test;

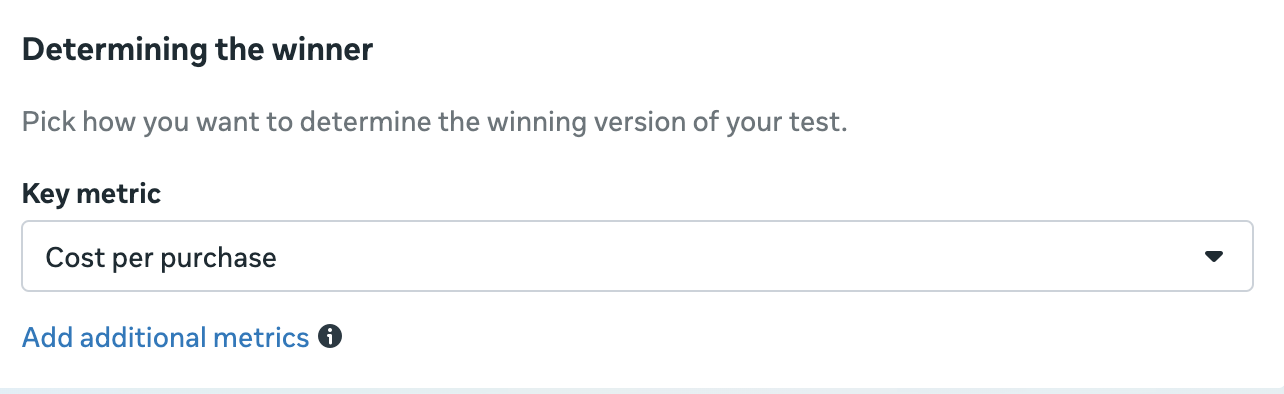

- Key Metric: select it based on your campaign objective and the hypothesis under test, for example that optimizing with Angler predictive CAPI improves (lowers) cost per website purchase;

- Toggle on Include upper funnel metrics in test report. These metrics measure user actions that precede your key metric and can provide insight when the key metric cannot determine a winner. For instance, a test may not find a winner on cost per purchase, but if it finds a top performer on cost per add-to-cart, you can use that information to make decisions;

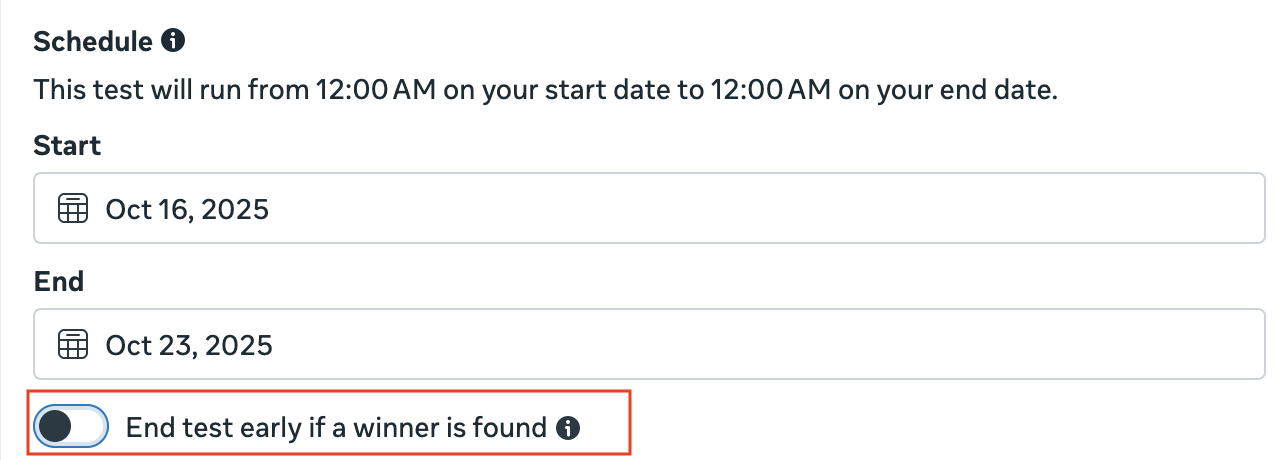

- Start: the start date of the test. If you are ready to launch, the earliest the test can start is 12:00 AM (midnight) the next day;

- End: the end date of the test. We recommend running the test for 2 weeks;

- Toggle off the End test early if a winner is found option.
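The Start and End settings above can be sketched as a small date calculation: the earliest start is the next midnight, and the recommended run is 14 days. `earliest_schedule` is a hypothetical helper; it assumes `now` is a naive datetime already expressed in your ad account's time zone, and it does not model how Ads Manager itself displays or rounds the end date.

```python
from datetime import datetime, timedelta


def earliest_schedule(now: datetime, duration_days: int = 14) -> tuple[datetime, datetime]:
    """Return (start, end) for a test launched as early as possible.

    Assumes the earliest start is 12:00 AM the next day and that the test
    runs for `duration_days` full days (14 = the recommended 2 weeks).
    """
    # Move to the next calendar day, then truncate to midnight.
    start = (now + timedelta(days=1)).replace(hour=0, minute=0, second=0, microsecond=0)
    end = start + timedelta(days=duration_days)
    return start, end


start, end = earliest_schedule(datetime(2024, 5, 6, 15, 30))
print(start)  # 2024-05-07 00:00:00
print(end)    # 2024-05-21 00:00:00
```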

When done, publish the test. Good luck!

Sandbox Campaigns with a Different Attribution Window or Model

If the attribution window or model used in your test cells differs from that of your sandbox campaigns, you may need to choose an upper-funnel key metric instead (for example, you might not be able to select Cost per Purchase as the key metric).

That’s fine: this configuration still keeps audience delivery across all cells non-overlapping. Your Angler team can then calculate statistical significance outside the platform using metrics reported in Ads Manager.
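For an out-of-platform significance check, one common option is a two-sided two-proportion z-test on purchase rate. This is a minimal sketch, not necessarily the method your Angler team uses: it assumes you export purchases and accounts reached for each test cell from Ads Manager, and it treats each reached account as a single trial, which is an approximation when people purchase more than once. It tests purchase rate, not cost per purchase directly. The figures in the usage lines are placeholders, not real results.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in conversion rate between cells A and B.

    conv_*: purchases in the cell; n_*: accounts reached in the cell (assumed trials).
    Returns (z, p_value); a positive z means cell B converts at a higher rate.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate under the null of no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; need both conversions and non-conversions")
    z = (rate_b - rate_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Placeholder numbers: control = cell A, challenger (Angler) = cell B; sandbox excluded.
z, p = two_proportion_z_test(conv_a=400, n_a=100_000, conv_b=480, n_b=100_000)
print(f"z = {z:.2f}, two-sided p = {p:.4f}")
```

Only the test cells go into the comparison; the sandbox cell is never one of the inputs.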

Interpretation of Results

When reviewing results, exclude metrics from the sandbox cell. Determine winners based solely on the relative performance of the test cells.
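The rule above amounts to a short filter: drop the sandbox cell, then compare the remaining test cells on the key metric. This minimal sketch assumes cost per purchase is the key metric and uses a hypothetical `results` dictionary built from exported spend and purchase totals (placeholder numbers). It ranks by raw cost per purchase only and does not check statistical significance.

```python
import math


def pick_winner(results: dict[str, dict[str, float]], sandbox_name: str = "sandbox") -> str:
    """Return the test cell with the lowest cost per purchase, ignoring the sandbox cell."""
    test_cells = {name: m for name, m in results.items() if name != sandbox_name}
    if not test_cells:
        raise ValueError("no test cells left after excluding the sandbox")
    # A cell with zero purchases gets an infinite cost so it can never win.
    cost_per_purchase = {
        name: m["spend"] / m["purchases"] if m["purchases"] else math.inf
        for name, m in test_cells.items()
    }
    return min(cost_per_purchase, key=cost_per_purchase.__getitem__)


results = {
    "control": {"spend": 5000.0, "purchases": 200},  # 25.00 per purchase
    "challenger (Angler)": {"spend": 5000.0, "purchases": 250},  # 20.00 per purchase
    "sandbox": {"spend": 20000.0, "purchases": 1200},  # 16.67 per purchase, ignored
}
print(pick_winner(results))  # challenger (Angler)
```

In this placeholder data the sandbox has the lowest cost per purchase, so including it would wrongly name it the winner; that is why it is filtered out first.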
